Background

One of the most important things in the software engineering world is the ability to automate your tests properly against your database, and by this I mean your actual database: something that can start up your database for you in case it's not running, then run the tests, and finally shut down the database when the tests are done.

In my experience, I have seen developers do a bit of manual work to get the following going:

- Starting up the database before running tests.

- Clearing out the database manually when done, or writing something to do that.

- Sometimes, and I mean sometimes, shutting down the database when done with the tests.

One great solution to this problem is to use an "in-memory" database. This has helped automate the key points mentioned above: there is no need to start the database or clear it out manually after running tests.

In most cases, however, the in-memory database doesn't match the actual database you will be running in the Development, QA and Production environments. For example, when using something like the H2 in-memory database, we need to keep in mind that it's not really the MSSQL Server or Postgres DB that runs in the actual environments. This means you may be limited when testing more intense, complex and database-specific processes and operations.

Would it not be nicer to be able to test against the actual database that we are running in the various environments? I think it would be awesome.

So then one day I was with my Chief Architect & CEO, discussing putting a small system together, all the way from tech stack and framework selection to doing some R&D. I was excited that I would finally get to put this tool into practice and see how it works. I wanted something that behaves much like the in-memory databases, except that it should be the real database we will be running on.

Things To Keep In Mind

Before we get started: if you will be checking out the article's code repository for reference, you may want to set up these tools first:

- Java 11

- Maven 3.6.2

- Docker

Otherwise, this article makes some assumptions:

- You are familiar with Docker.

- You already have a Java project with a datasource connection component.

- You have some understanding of Maven.

- You have worked with JUnit; in this case we are talking about JUnit 5.

Context

Now that you are ready with the tools needed, we are going to go through a library named Testcontainers. It is a nice, lightweight Docker API of sorts. Through the power of JUnit 5 we will also be able to start up and shut down the database automatically. The database for this article is Postgres, but Testcontainers supports a lot of database services, so you are not tied to Postgres. Remember that it uses Docker, meaning you can pretty much use Testcontainers for anything else besides a database service; furthermore, you can even play with Docker Compose files. So this really opens a whole new avenue of possibilities in the software engineering world.

Getting Started

Adding Dependencies

If you check the Testcontainers home page, there's a Gradle equivalent that you can try if you are using it. In our case you will need:

    <dependency>
        <groupId>org.testcontainers</groupId>
        <artifactId>postgresql</artifactId>
        <version>1.14.3</version>
        <scope>test</scope>
    </dependency>
    <dependency>
        <groupId>org.testcontainers</groupId>
        <artifactId>testcontainers</artifactId>
        <version>1.14.3</version>
        <scope>test</scope>
    </dependency>
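Since the tests in this article target JUnit 5, it may help to know that Testcontainers publishes its JUnit 5 (Jupiter) integration as a separate artifact. The fragment below is only a sketch: it assumes you want that integration and that you keep it on the same 1.14.3 version as the two dependencies above. It is not taken from the article's repository.

```xml
<!-- Assumption: optional JUnit 5 (Jupiter) integration, same version as above -->
<dependency>
    <groupId>org.testcontainers</groupId>
    <artifactId>junit-jupiter</artifactId>
    <version>1.14.3</version>
    <scope>test</scope>
</dependency>
```

With this on the test classpath, a test class can be annotated with @Testcontainers and a container field with @Container, so that the container is started and stopped around the tests.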

Docker

For this to work you need Docker to be running. After that, you don't have to worry about:

- The actual database service ...

- Nor the database container ...

- Or even, the container image.

Datasource Configuration

It's as simple as using the "default" Testcontainers datasource configuration:

URL : jdbc:tc:postgresql:13:///test

Username : test

Password : test

Driver Class Name : org.testcontainers.jdbc.ContainerDatabaseDriver

Notice the "tc" inside the URL literal. That's how you know that it's a Testcontainers URL. The default database name is "test", as you can see at the end of the URL literal. The same goes for the username and password.
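To make the anatomy of that URL concrete, here is a small sketch that splits it into the pieces described above. TcJdbcUrl is a hypothetical helper written for this article, not part of Testcontainers, and it assumes the URL always carries an image tag as in the example.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Hypothetical helper (not part of Testcontainers) that splits a jdbc:tc: URL into its parts. */
final class TcJdbcUrl {

    // Shape assumed here: jdbc:tc:<database type>:<image tag>://<host, usually empty>/<database name>
    private static final Pattern TC_URL =
            Pattern.compile("^jdbc:tc:([a-z0-9]+):([^:/]+)://[^/]*/([^?]+).*$");

    final String databaseType;
    final String imageTag;
    final String databaseName;

    private TcJdbcUrl(String databaseType, String imageTag, String databaseName) {
        this.databaseType = databaseType;
        this.imageTag = imageTag;
        this.databaseName = databaseName;
    }

    /** Returns true when the URL carries the "tc" marker right after "jdbc:". */
    static boolean isTestcontainersUrl(String url) {
        return url.startsWith("jdbc:tc:");
    }

    /** Parses the URL or throws if it does not have the assumed shape. */
    static TcJdbcUrl parse(String url) {
        Matcher matcher = TC_URL.matcher(url);
        if (!matcher.matches()) {
            throw new IllegalArgumentException("Not a jdbc:tc: URL with an image tag: " + url);
        }
        return new TcJdbcUrl(matcher.group(1), matcher.group(2), matcher.group(3));
    }

    public static void main(String[] args) {
        TcJdbcUrl parts = parse("jdbc:tc:postgresql:13:///test");
        // Prints: postgresql 13 test
        System.out.println(parts.databaseType + " " + parts.imageTag + " " + parts.databaseName);
    }
}
```

Read this way, the URL says: use Testcontainers ("tc"), start a postgresql container from the image tagged 13, and connect to the database named test.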

So we are almost there. Believe it or not, that's all you need. Now the final piece: some Java JUnit 5 code to test out the magic.

Java & JUnit

We are going to use the @ClassRule annotation to do some work before the rest of the actual test loads. This is similar to the @BeforeClass annotation. Note that @ClassRule and @BeforeClass are JUnit 4 annotations; if your tests run purely on JUnit 5, the equivalent is the @Container annotation together with @Testcontainers, both from the Testcontainers junit-jupiter module. The reason we use this is to kick-start our Docker container before the tests even get to run, so it's preparation for the actual test cases. Create a new test case for your existing data access code and add this class field to it:

    @ClassRule
    public static PostgreSQLContainer<?> postgreSQLContainer = new PostgreSQLContainer<>("postgres:13");

Write some sample code to test this out. In my case I have something along the lines of ...

    // Some code here
    ...

    @Test
    public void assert_That_We_Can_Save_A_Book() {
        // Save the book and flush the change to the database.
        Book saved_lordOfTheRings_001 = bookDataAccess.saveAndFlush(lordOfTheRings_001);

        assertNotNull(saved_lordOfTheRings_001);
        assertNotNull(saved_lordOfTheRings_001.getId());
        // JUnit expects the expected value first, then the actual one.
        assertEquals(lordOfTheRings_001.getTitle(), saved_lordOfTheRings_001.getTitle());
    }

So that's pretty much it. You can run your test cases, and they should connect to your database and store some information. Let's test this out.

Validating The Data Graphically

We can look into it by debugging our test case with a breakpoint immediately after the line that saves a database record.

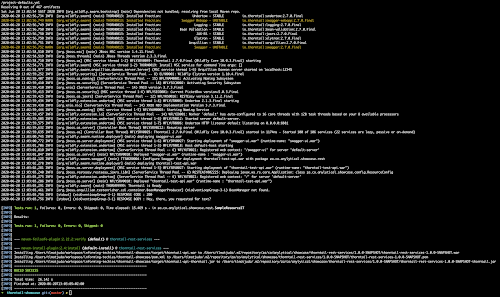

Start up any Docker client of your choice. At this point you will not have any Postgres Docker container running, unless you were already using Postgres in Docker. The container started by your test cases gets a random name, but there's no way you will miss it, and you can also check the image version in your Docker client, i.e. postgres:13.

So this is my Docker client before running the tests. By the way, the Docker client I am using is Portainer.

So now we are going to run a test case and pause it just after saving to the database.

As you can see, my breakpoint is in place and the debugger has paused just after saving to the database. Now let's go back to our Docker client interface, Portainer in my case...

Notice the two new containers that have been created:

- testcontainers-ryuk-40abb5dc-... : this is the Testcontainers helper service (Ryuk), which cleans up the containers once the tests are done.

- eloquent_robinson : the more important one, your running test database. As you can see, it has a random name. Notice the image, postgres:13; that's our guy right there. The host port for this run is 32769.

The port number is important because, like the container name, it is random. This is by design according to the Testcontainers engineers. For this test case run, we are going to connect to the database while the debugger is still paused, so that we can see our saved data. Open your Postgres database client and connect using the following properties:

- Username : test

- Password : test

- Database Name : test

- Port : 32769

The URL will be something like : jdbc:postgresql://localhost:32769/test
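If you prefer to check from code instead of a GUI client, the sketch below builds the same plain JDBC URL from the mapped port and counts the rows in a table. It assumes the PostgreSQL JDBC driver is on the classpath; MappedPortCheck and the table name you pass in are hypothetical, and the port 32769 is only the one from this article's run, so yours will differ.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

/** Hypothetical helper for peeking into the test database while the debugger is paused. */
final class MappedPortCheck {

    /** Builds the plain (non-tc) JDBC URL for a Postgres container published on a host port. */
    static String jdbcUrl(String host, int mappedPort, String database) {
        return "jdbc:postgresql://" + host + ":" + mappedPort + "/" + database;
    }

    /**
     * Counts the rows in the given table.
     * The table name is concatenated into the SQL, so only pass a trusted identifier.
     */
    static long countRows(String url, String user, String password, String table)
            throws SQLException {
        try (Connection connection = DriverManager.getConnection(url, user, password);
             Statement statement = connection.createStatement();
             ResultSet rows = statement.executeQuery("SELECT COUNT(*) FROM " + table)) {
            rows.next(); // COUNT(*) always yields exactly one row
            return rows.getLong(1);
        }
    }

    public static void main(String[] args) throws SQLException {
        // Assumption: 32769 is the random host port shown by your Docker client for this run.
        String url = jdbcUrl("localhost", 32769, "test");
        System.out.println(url);

        // Only connect when a table name is supplied, e.g. the table behind your Book entity.
        if (args.length > 0) {
            System.out.println(countRows(url, "test", "test", args[0]));
        }
    }
}
```

Because the port changes on every run, the value has to be looked up each time, either in the Docker client as done here or from the container object in the test.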

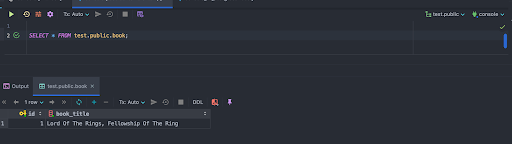

Connect and run a simple query to see the data saved by that test case. You should get something like the one in the image below:

Ka-BOOM! There you go. You have not only managed to run a successful integration test, but also validated that it does indeed save your data to the Docker-hosted database, as you expected. Done, done and done!

In Closing

I hope this was fun and you have cases where this can help ease your software development processes.

Leave some comments below, and you may check out the GitHub Docker Database Test - Source Code for reference.