Context

Oops! We almost left out one important thing. We looked at how to "dockerize" your "Hollow JAR", but we did not implement any sort of automated integration test for your web service. There are a lot of ways one can go about this. One of the recurrent practices is the use of Postman, followed by Postwoman.

As long as your service is running, you can write some really nice JavaScript scripts in the tools mentioned above to automate this for you. Another way, for traditional Java developers, is to go the JUnit route. Either way, at some point you will need your service to be running somewhere, waiting for client requests.

The JUnit route was later improved for Java EE integration testing through the configuration and use of two frameworks, Arquillian and ShrinkWrap, as add-ons to JUnit.

Arquillian & ShrinkWrap

Arquillian plays the role of a "middle-man" between an artifact (.jar, .war, .sar, .ear) that you want to deploy and the container or application server you want to deploy it to. There are two ways you can deploy your artifact: either you deploy the actual physical artifact that you have just built on your machine, or you programmatically build one for testing. To build one programmatically you use ShrinkWrap.

Getting Started

Those who already know will agree that when you are working with a standalone application server, in this case WildFly, setting up Arquillian is quite a bit of work, but at least you set it up once and the rewards are out of this world. On the other hand, I have noticed over the past six or so years (since 2013) that things have improved when it comes to all the dependencies one must configure and the configuration files one needs to create. I am really happy with what they have done with it in Thorntail, and that's what we are going to look at for our Java EE microservice.

Once again we will continue working on the Thorntail repo we have been using since our first article, Java EE Micro Services Using Thorntail, and the one that followed, Dockerizing Your Java EE Thorntail Microservice.

Start by opening your "pom.xml" file and adding the following dependency. Because Thorntail has already packaged things nicely for you, the developer, you are basically including a "Fraction".

<dependency>
    <groupId>io.thorntail</groupId>
    <artifactId>arquillian</artifactId>
    <scope>test</scope>
</dependency>

Part of the normal process with Arquillian is to also include the JUnit dependencies / libraries, right? Ha ha ha ha, well, this Thorntail fraction already includes them. Currently it includes JUnit 4.12, so you may exclude it using Maven and include JUnit 5 instead if you want. For the purpose of getting you up and running with Thorntail, I am just going to keep it as is. This fraction also includes the Arquillian & ShrinkWrap libraries that you would otherwise have to configure separately, but not today! The next thing is to configure our Maven testing plugin, the Maven Failsafe Plugin.

This plugin was designed for integration testing, which is exactly what we want, since we want to call our real REST service when testing and get real results.

<plugin>
    <artifactId>maven-failsafe-plugin</artifactId>
    <version>2.22.2</version>
    <executions>
        <execution>
            <goals>
                <goal>integration-test</goal>
                <goal>verify</goal>
            </goals>
        </execution>
    </executions>
</plugin>

Test Case Implementation

Now let's get to the fun stuff, our test case implementation. Create a new Test Suite, aka Test Class. Make sure that the name of the class ends with "IT" (which means Integration Test), because by default the Maven Failsafe Plugin looks for classes whose names end with "IT" in order to run them as integration tests. For example, I named mine "SampleResourceIT". So let's move on to some action ...
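To make the naming rule a bit more concrete, here is a rough sketch of the check. As far as I know, the Failsafe default include patterns are `**/IT*.java`, `**/*IT.java` and `**/*ITCase.java`. The class `FailsafeNaming` and the method `matchesDefaultIncludes` are made up for this illustration and are not part of Maven; the sketch only looks at the simple class name and ignores any custom includes or excludes you may have configured.

```java
import java.util.List;

public class FailsafeNaming {

    // Hypothetical helper: mirrors the default Failsafe include patterns
    // (**/IT*.java, **/*IT.java, **/*ITCase.java) for a simple class name.
    public static boolean matchesDefaultIncludes(String simpleClassName) {
        return simpleClassName.startsWith("IT")
                || simpleClassName.endsWith("IT")
                || simpleClassName.endsWith("ITCase");
    }

    public static void main(String[] args) {
        List<String> names = List.of(
                "SampleResourceIT",      // the name used in this article
                "SampleResourceTest",    // a unit test style name
                "ITSampleResource",
                "SampleResourceITCase");
        for (String name : names) {
            System.out.println(name + " -> " + matchesDefaultIncludes(name));
        }
    }
}
```

A class named "SampleResourceTest" does not match any of these patterns, so it would normally be left to the unit test phase rather than run as an integration test.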

Add an annotation at class level as follows :

...

@RunWith(Arquillian.class)
public class SampleResourceIT { }

...

You are instructing JUnit to run with Arquillian. Literally what's there. So this will not be a normal JUnit test case. You will notice that immediately after adding this annotation you already have some errors. This is because Arquillian now wants to know how you would like to package the artifact that it should deploy.

So then we should now add a new method with the @Deployment annotation. This annotation is from the Arquillian Framework. It is where we will build our artifact for Arquillian to deploy it.

...

@Deployment
public static Archive<?> createDeployment() { }

...

Now let's build our test ".war" file inside that method using the ShrinkWrap API.

...

@Deployment
public static Archive<?> createDeployment() {
    // Name the test archive; any name will do
    WebArchive webArtifact = ShrinkWrap.create(WebArchive.class, "thorntail-test-api.war");

    // Include our application classes; the first argument means "search recursively"
    webArtifact.addPackages(Boolean.TRUE, "za.co.anylytical.showcase");

    // Include the same configuration file the real application uses
    webArtifact.addAsWebResource("project-defaults.yml");

    // Print all files and included packages
    System.out.println(webArtifact.toString(true));

    return webArtifact;
}

...

So now we have built our small, simple test web archive file. We are giving our war file a name, "thorntail-test-api.war". I believe you know that you can name it anything you want, so you are not tied to a name similar to the original file name. The next thing we do is include our package, which contains pretty much our REST service and its business logic. So in a nutshell it contains our application Java classes in "za.co.anylytical.showcase"; the package name follows a flag that tells ShrinkWrap to search recursively. The next part is about including any resource files that we may want. I know that some of our actual REST configuration is in our file "project-defaults.yml", and to be as close as possible to our actual application we should include it in our test web archive file. The last part prints everything that's in our file, just to see what this test war file contains and to be sure that we have everything we want in there.
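If you are wondering what ends up inside that test archive, here is a small sketch that does not use ShrinkWrap at all. It only uses `java.util.zip` from the JDK to build an in-memory zip with the entry names I would expect to see: classes added through `addPackages` land under `WEB-INF/classes`, and a file added through `addAsWebResource` lands at the root of the web archive. The class `WarLayoutSketch` and the entry `SampleResource.class` are assumptions made for this illustration; your real listing depends on what is in your package.

```java
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import java.util.ArrayList;
import java.util.List;
import java.util.zip.ZipEntry;
import java.util.zip.ZipInputStream;
import java.util.zip.ZipOutputStream;

public class WarLayoutSketch {

    // Builds an in-memory zip whose (empty) entries mimic the expected layout of the test .war.
    public static byte[] buildWar() {
        ByteArrayOutputStream bytes = new ByteArrayOutputStream();
        try (ZipOutputStream zip = new ZipOutputStream(bytes)) {
            List<String> entries = List.of(
                    // hypothetical class name, for illustration only
                    "WEB-INF/classes/za/co/anylytical/showcase/SampleResource.class",
                    "project-defaults.yml");
            for (String name : entries) {
                zip.putNextEntry(new ZipEntry(name));
                zip.closeEntry();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bytes.toByteArray();
    }

    // Reads the entry names back, similar in spirit to printing the archive contents.
    public static List<String> listEntries(byte[] war) {
        List<String> names = new ArrayList<>();
        try (ZipInputStream zip = new ZipInputStream(new ByteArrayInputStream(war))) {
            for (ZipEntry e = zip.getNextEntry(); e != null; e = zip.getNextEntry()) {
                names.add(e.getName());
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return names;
    }

    public static void main(String[] args) {
        listEntries(buildWar()).forEach(System.out::println);
    }
}
```

Comparing a listing like this with what `webArtifact.toString(true)` prints is a quick way to spot a missing class or a configuration file that landed in the wrong place.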

Great stuff. So now we want to write a test that just calls our test service and asserts that we managed to reach the Web Resource just fine.

...

@Test
public void test_That_We_Reach_Our_WebResource_Just_Fine_Yea() throws Exception {
    // Build a JAX-RS client and point it at our REST resource
    Client client = ClientBuilder.newBuilder().build();
    WebTarget target = client.target("http://localhost:8881/that-service/text");

    // Call the service and read the result
    Response response = target.request().get();
    int statusCode = response.getStatusInfo().getStatusCode();
    String reponseBody = response.readEntity(String.class);

    // HTTP 200 means that we reached the resource
    assertEquals(200, statusCode);

    System.out.println("RESPONSE CODE : " + statusCode);
    System.out.println("RESPONSE BODY : " + reponseBody);
}

...

We have a simple test case that uses the standard JAX-RS 2.x Client API, so no magic there. We build our client, call the REST service we want to call, and then validate that we get an HTTP status code 200, which means that things went well. No issues, ZILCH!
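Stripped of Arquillian and JAX-RS, the idea of the test is simply "call a running service and check the status code". Here is a minimal sketch of that idea using only the JDK (Java 11 or newer). Take note of the assumptions: the class `SmokeTestSketch`, the stub server, the "hello" body and the random port are all made up so that the snippet can run on its own. The stub stands in for the service that Arquillian would deploy for us; only the path "/that-service/text" is borrowed from the test above.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

public class SmokeTestSketch {

    // Starts a stub service on a free port, calls it once and returns the HTTP status code.
    public static int statusOfStub() {
        try {
            // Stub standing in for the deployed REST service (an assumption for this sketch)
            HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
            server.createContext("/that-service/text", exchange -> {
                byte[] body = "hello".getBytes(StandardCharsets.UTF_8);
                exchange.sendResponseHeaders(200, body.length);
                try (OutputStream out = exchange.getResponseBody()) {
                    out.write(body);
                }
            });
            server.start();
            try {
                int port = server.getAddress().getPort();
                HttpClient client = HttpClient.newHttpClient();
                HttpRequest request = HttpRequest
                        .newBuilder(URI.create("http://127.0.0.1:" + port + "/that-service/text"))
                        .GET()
                        .build();
                HttpResponse<String> response =
                        client.send(request, HttpResponse.BodyHandlers.ofString());
                return response.statusCode();
            } finally {
                // Always shut the stub down, even if the call fails
                server.stop(0);
            }
        } catch (Exception e) {
            throw new RuntimeException(e);
        }
    }

    public static void main(String[] args) {
        System.out.println("RESPONSE CODE : " + statusOfStub());
    }
}
```

In the real test there is no stub to write, because Arquillian takes care of starting the server and deploying the archive before JUnit runs the test method.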

These are my IntelliJ IDEA test run results. You can also run the tests with Maven as follows:

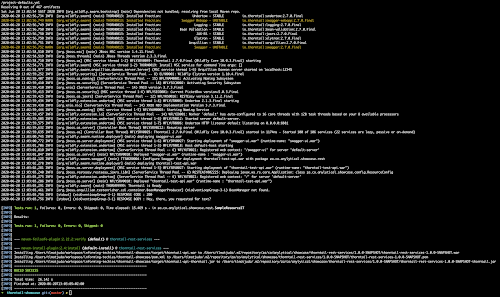

mvn clean install

This will perform a build while running the integration tests for us, which should give you results like the ones in the image below:

If you pay close attention to the image above, you will see that Arquillian started up WildFly for us, used ShrinkWrap to build a ".war" file and deployed it for us, all done automatically. On top of that, JUnit kicked in and ran our test case once Arquillian was done with the deployment. Now that's magic! KA-BOOM!

The full test case looks like this:

import static org.junit.Assert.assertEquals;

import javax.ws.rs.client.Client;
import javax.ws.rs.client.ClientBuilder;
import javax.ws.rs.client.WebTarget;
import javax.ws.rs.core.Response;

import org.jboss.arquillian.container.test.api.Deployment;
import org.jboss.arquillian.junit.Arquillian;
import org.jboss.shrinkwrap.api.Archive;
import org.jboss.shrinkwrap.api.ShrinkWrap;
import org.jboss.shrinkwrap.api.spec.WebArchive;
import org.junit.Test;
import org.junit.runner.RunWith;

@RunWith(Arquillian.class)
public class SampleResourceIT {

    @Deployment
    public static Archive<?> createDeployment() {
        WebArchive webArtifact = ShrinkWrap.create(WebArchive.class, "thorntail-test-api.war");
        webArtifact.addPackages(Boolean.TRUE, "za.co.anylytical.showcase");
        webArtifact.addAsWebResource("project-defaults.yml");

        // Print all files and included packages
        System.out.println(webArtifact.toString(true));

        return webArtifact;
    }

    @Test
    public void test_That_We_Reach_Our_WebResource_Just_Fine_Yea() throws Exception {
        Client client = ClientBuilder.newBuilder().build();
        WebTarget target = client.target("http://localhost:8881/that-service/text");

        Response response = target.request().get();
        int statusCode = response.getStatusInfo().getStatusCode();
        String reponseBody = response.readEntity(String.class);

        assertEquals(200, statusCode);

        System.out.println("RESPONSE CODE : " + statusCode);
        System.out.println("RESPONSE BODY : " + reponseBody);
    }
}

As usual you may ...

Leave some comments below, and you may check out the GitHub Source Code to validate the steps.